
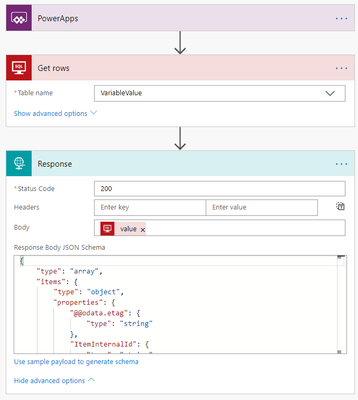

Another method for making a collection from SharePoint and SQL larger than 2,000 items. Bye-bye delegation!

Maybe I'm slow and just now finding this out, but I thought I would share it with you. I have been working with some SQL data sets and SharePoint lists that exceed 2,000 items. A while back I summarized a method that could get up to 4,000 items. Using Flow, I have found a way to collect many more: I have successfully retrieved a SQL table of over 20,000 rows with this method. It also works with SharePoint, but it is much slower and has some other hang-ups, which I'll try to outline here.

The basic method is to build a JSON array from the data and then pass it back to PowerApps via a Response action. This is how it works:

Sample flow, "GetSQLData"

1. The entire flow is only three steps: the PowerApps trigger, the SQL "Get rows" action, and a Response action.

2. Open the "Settings" of the Get rows action, turn on Pagination, and set a maximum number in the Limit field.

3. The trick is the JSON schema. First run the flow with only steps one and two, limiting the output to a single row (set "Top Count" in the advanced options to 1, without quotes). Copy (highlight, then Ctrl+C) the output, but ONLY the part in square brackets, including the brackets.

4. Open the Response action. Select "Use sample payload to generate schema", paste what you copied in step 3, and select Done. The system will generate the schema.

5. In PowerApps, call the flow in OnStart or OnVisible: `ClearCollect(TableData, GetSQLData.Run())`

6. After this, all of the table data should be available in your collection, with no delegation issues and a fast response. (Don't forget to remove the limit in "Top Count" if you set one!)
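The "Use sample payload to generate schema" step can be pictured with a minimal Python sketch that walks a one-row sample and emits a JSON schema for an array of objects. This is not Power Automate's actual generator: `infer_schema` and the sample columns are hypothetical, and mapping a `null` sample value to an untyped `{}` entry is an assumption, offered as one plausible way the empty-type pitfall described in the PITFALLS section can arise.

```python
import json
from typing import Any


def infer_schema(value: Any) -> dict:
    """Build a simplified JSON schema from one sample value (hypothetical helper)."""
    # bool must be tested before int, because bool is a subclass of int in Python
    if isinstance(value, bool):
        return {"type": "boolean"}
    if isinstance(value, int):
        return {"type": "integer"}
    if isinstance(value, float):
        return {"type": "number"}
    if isinstance(value, str):
        # Dates arrive as ISO-8601 text, so they are typed as plain strings
        return {"type": "string"}
    if isinstance(value, list):
        # Only the first element is inspected, which is why a one-row sample is enough
        return {"type": "array", "items": infer_schema(value[0]) if value else {}}
    if isinstance(value, dict):
        return {
            "type": "object",
            "properties": {key: infer_schema(item) for key, item in value.items()},
        }
    # Assumption: null (None) carries no type information, so the column stays untyped as {}
    return {}


# The part of the Get rows output inside the square brackets, limited to one row
sample = json.loads(
    '[{"ID": 1, "PartNumber": "A-100", "OnHand": 12, "Active": true,'
    ' "Modified": "2019-03-26T10:00:00Z", "LastCost": null}]'
)
print(json.dumps(infer_schema(sample), indent=2))
```

Note how the date column comes out as a string, which matches the post's advice to convert it with DateTimeValue() in PowerApps.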

SharePoint modification:

SharePoint is a slightly different animal. You have to remap the tables, because something in the JSON output from SharePoint is strange and PowerApps just does not like it. So add a Select action and map each column. KEEP IN MIND the pitfall note below: if you change anything in the Select columns, you will have to generate a new schema. Your sample payload will come from the Select output when you do a test run, instead of from the SharePoint output. You will also have to remove the data source and re-add the flow in PowerApps to reset it.
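What the Select action does here can be sketched in Python: each SharePoint item is rebuilt as a flat row containing only the columns you map explicitly, so metadata and nested values never reach PowerApps. The post does not say exactly what PowerApps dislikes in the raw output; the item shape below (an `@odata.etag` key and a choice column returned as an object with a `Value` key) is an assumption for illustration, and `get_path` and `select_columns` are hypothetical helpers.

```python
from typing import Any

Row = dict[str, Any]


def get_path(item: Row, path: tuple[str, ...]) -> Any:
    """Follow a key path into nested dicts; return None if any step is missing."""
    value: Any = item
    for key in path:
        if not isinstance(value, dict):
            return None
        value = value.get(key)
    return value


def select_columns(items: list[Row], mapping: dict[str, tuple[str, ...]]) -> list[Row]:
    """Rebuild each item as a flat row holding only the mapped columns."""
    return [{column: get_path(item, path) for column, path in mapping.items()} for item in items]


# Assumed shape of one raw SharePoint item
items = [
    {
        "@odata.etag": '"3"',
        "ID": 7,
        "Title": "Mounting bracket",
        "Status": {"Id": 0, "Value": "Active"},
    }
]
mapping = {"ID": ("ID",), "Title": ("Title",), "Status": ("Status", "Value")}
print(select_columns(items, mapping))
# [{'ID': 7, 'Title': 'Mounting bracket', 'Status': 'Active'}]
```

Because the output columns are fixed by `mapping`, changing the mapping changes the payload shape, which is why the schema has to be regenerated after any change to the Select columns.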

Accelerating retrieval of thousands of items!

You can increase retrieval speed roughly by the number of "lanes" you run in parallel. Divide the index range into as many sub-ranges as you have lanes. In my flow, I split the index into four ranges and put them in parallel actions. Yes, this cuts retrieval time to nearly 1/4 of the time a single range takes!
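The lane idea can be sketched in Python. `split_ranges` divides an inclusive ID range into contiguous, non-overlapping sub-ranges, and a thread pool fetches them concurrently before concatenating the results in order. `fetch_range` is a hypothetical stand-in for one Get rows action filtered to an ID range (in the flow, each lane would be a parallel branch), and the in-memory table replaces the real data source. The real speed-up is only roughly proportional to the lane count, since it depends on how well the source handles concurrent requests.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any

Row = dict[str, Any]

# In-memory stand-in for the SQL table or SharePoint list (assumption)
TABLE: list[Row] = [{"ID": i, "PartNumber": f"P-{i:05d}"} for i in range(1, 22_001)]


def split_ranges(first_id: int, last_id: int, lanes: int) -> list[tuple[int, int]]:
    """Split the inclusive range [first_id, last_id] into at most `lanes` contiguous sub-ranges."""
    total = last_id - first_id + 1
    base, extra = divmod(total, lanes)
    ranges = []
    start = first_id
    for lane in range(lanes):
        # Spread the remainder over the first `extra` lanes so sizes differ by at most one
        size = base + (1 if lane < extra else 0)
        if size == 0:
            continue
        ranges.append((start, start + size - 1))
        start += size
    return ranges


def fetch_range(bounds: tuple[int, int]) -> list[Row]:
    """Hypothetical lane: the rows whose ID falls inside one sub-range."""
    low, high = bounds
    return [row for row in TABLE if low <= row["ID"] <= high]


def fetch_all(first_id: int, last_id: int, lanes: int) -> list[Row]:
    """Fetch every sub-range concurrently and join the results in ID order."""
    with ThreadPoolExecutor(max_workers=lanes) as pool:
        # pool.map yields results in the order of the input ranges
        chunks = pool.map(fetch_range, split_ranges(first_id, last_id, lanes))
        return [row for chunk in chunks for row in chunk]


print(split_ranges(1, 22_000, 4))
# [(1, 5500), (5501, 11000), (11001, 16500), (16501, 22000)]
rows = fetch_all(1, 22_000, 4)
print(len(rows))  # 22000
```

Keeping the ranges contiguous and non-overlapping is what lets the lanes be concatenated without duplicates or gaps.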

Update data after initial load:

You may wish to update your data instead of loading the entire dataset again. This method takes your existing dataset, looks for updates in your sources, and then applies them to the collections already in your app. While the initial load of my data can take nearly 60 seconds, an update check and update take only 6-8 seconds. This keeps your data from going stale. You can also use timers to refresh the data at regular intervals.

```
// Data Refresh Routine v1.0.0 3/26/2019

// Show the loading spinner and set the three progress indicators to red
UpdateContext({LoadDataSpinner:true,Circle1_Color:Red,Circle2_Color:Red,Circle3_Color:Red});

// Empty the staging collections used by this refresh
Concurrent(
    Clear(UpdatedFromMDL),Clear(UpdatedAcct),Clear(UpdatedAcctProd),Clear(UpdatedAcctData),Clear(UpdatedMDL),
    UpdateContext({TimerReset:false, Circle1_Color:Green})
);

// Refresh the data sources and collect only the rows changed since LastUpdate.
// The SQL comparisons shift LastUpdate by the local time zone offset (in whole hours).
Concurrent(
    Refresh('Engineering Change Notice'), Refresh('Engineering Change Request'), Refresh(Drawing_Vault),
    If(CountRows(ClearCollect(UpdatedMDL,Filter(SortByColumns(Master_Drawing_List,"Modified",Descending),Modified>LastUpdate)))>0,UpdateContext({No_MDL_Updates:false}),UpdateContext({No_MDL_Updates:true})),
    If(CountRows(ClearCollect(UpdatedAcct,Filter(SortByColumns('[dbo].[tbINVTransactionDetail]',"PostedTimestamp",Descending),DateTimeValue(Text(PostedTimestamp))>DateAdd(LastUpdate,-DateDiff(Now(), DateAdd(Now(),TimeZoneOffset(),Minutes),Hours),Hours))))>0,UpdateContext({No_Acct_Updates:false}),UpdateContext({No_Acct_Updates:true})),
    If(CountRows(ClearCollect(UpdatedAcctProd,Filter(SortByColumns('[dbo].[VIEW_POWERAPPS_BASIC_ACCTIVATE_DATA]',"UpdatedDate",Descending),DateTimeValue(Text(UpdatedDate))>DateAdd(LastUpdate,-DateDiff(Now(), DateAdd(Now(),TimeZoneOffset(),Minutes),Hours),Hours))))>0,UpdateContext({No_AcctProd_Updates:false}),UpdateContext({No_AcctProd_Updates:true}))
);

// Append the product-level changes to the transaction-level changes
Collect(UpdatedAcct,UpdatedAcctProd);
UpdateContext({Circle2_Color:Green});

// Gather every changed part number, and fetch the current accounting row for each changed product
Concurrent(
    ClearCollect(UpdatePartNumbers,UpdatedMDL.MD_PartNumber,RenameColumns(UpdatedAcct,"ProductID","MD_PartNumber").MD_PartNumber,RenameColumns(UpdatedAcctProd,"ProductID","MD_PartNumber").MD_PartNumber),
    ForAll(UpdatedAcct.ProductID,Collect(UpdatedAcctData,LookUp(RenameColumns('[dbo].[VIEW_POWERAPPS_BASIC_ACCTIVATE_DATA]',"ProductID","Acct_PartNumber"),Acct_PartNumber=ProductID)))
);
UpdateContext({Circle3_Color:Green});

// Fetch the current Master_Drawing_List record for each distinct changed part number
ForAll(Distinct(UpdatePartNumbers,MD_PartNumber).Result,Collect(UpdatedFromMDL,LookUp(Master_Drawing_List,MD_PartNumber=Result)));

// Patch the matching record in the MDL_All collection. MD_PartNumber is renamed so the
// LookUp condition can tell the outer row apart from MDL_All's own MD_PartNumber column.
ForAll(RenameColumns(UpdatedFromMDL,"MD_PartNumber","MD_PartNumber2"),
    Patch(MDL_All,LookUp(MDL_All,MD_PartNumber=MD_PartNumber2),
        {
            MD_Project: MD_Project,
            MD_LatestRevNumber: MD_LatestRevNumber,
            MD_EngrgRelDate: MD_EngrgRelDate,
            Title: Title,
            MD_PDM_Link: MD_PDM_Link,
            MD_LinkToDrawing: MD_LinkToDrawing,
            MD_IsActive: MD_IsActive,
            MD_Data_Flagged: MD_Data_Flagged,
            MD_Latest_SW_DocumentID: MD_Latest_SW_DocumentID,
            LastCost:LookUp(UpdatedAcctData,Acct_PartNumber=MD_PartNumber2,LastCost),
            OnHand: LookUp(UpdatedAcctData,Acct_PartNumber=MD_PartNumber2,OnHand),
            Location_Acct: LookUp(UpdatedAcctData,Acct_PartNumber=MD_PartNumber2,Location),
            Discontinued_Acct: LookUp(UpdatedAcctData,Acct_PartNumber=MD_PartNumber2,Discontinued),
            Available: LookUp(UpdatedAcctData,Acct_PartNumber=MD_PartNumber2,Available)
        }
    )
);

// Record the time of this refresh, set slightly in the past
Set(LastUpdate,Now()-.002);
UpdateContext({TotalDwgCount:CountRows(MDL_All),LoadDataSpinner:false});

// Refresh the stock quote through a second flow
ClearCollect(StockQuote,GetKIQStockQuote.Run());
UpdateContext({LastStockUpdate:Now()})
```
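The refresh routine above follows a general delta-refresh pattern: query only rows modified after a watermark (`LastUpdate`), merge them into the cached data by key, then move the watermark forward, set slightly in the past. Here is a minimal Python sketch of that pattern under stated assumptions: the column names, the `refresh` helper, and the three-minute margin are hypothetical (the routine above uses `Now()-.002`), and unlike the Patch loop, which targets records it finds in `MDL_All`, this sketch also adds part numbers that are not cached yet.

```python
from datetime import datetime, timedelta
from typing import Any

Row = dict[str, Any]


def changed_rows(source: list[Row], watermark: datetime) -> list[Row]:
    """The delta query: rows whose Modified timestamp is later than the watermark."""
    return [row for row in source if row["Modified"] > watermark]


def upsert(cache: dict[str, Row], updates: list[Row], key: str) -> None:
    """Overwrite the fields of cached rows that share a key; add rows not yet cached."""
    for row in updates:
        cache[row[key]] = {**cache.get(row[key], {}), **row}


def refresh(cache: dict[str, Row], source: list[Row], key: str, watermark: datetime,
            now: datetime, margin: timedelta = timedelta(minutes=3)) -> datetime:
    """Apply changes made since `watermark` and return the next watermark."""
    upsert(cache, changed_rows(source, watermark), key)
    # Back the watermark off by `margin`: rows modified shortly before `now` are fetched
    # again next time, which is harmless because applying the same update twice is a no-op.
    return now - margin


t0 = datetime(2019, 3, 26, 8, 0)
cache = {
    "P-1": {"PartNumber": "P-1", "Rev": "A", "Modified": t0},
    "P-2": {"PartNumber": "P-2", "Rev": "A", "Modified": t0},
}
source = [
    {"PartNumber": "P-1", "Rev": "B", "Modified": t0 + timedelta(hours=1)},
    {"PartNumber": "P-2", "Rev": "A", "Modified": t0},
    {"PartNumber": "P-3", "Rev": "A", "Modified": t0 + timedelta(hours=2)},
]
next_watermark = refresh(cache, source, "PartNumber", watermark=t0, now=t0 + timedelta(hours=3))
print(cache["P-1"]["Rev"], sorted(cache), next_watermark)
# B ['P-1', 'P-2', 'P-3'] 2019-03-26 10:57:00
```

Only P-1 and P-3 are newer than the watermark, so P-2 is never touched; that is where the 6-8 second update versus the full 60 second load comes from.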

**PITFALLS!!** (PLEASE read to reduce frustrations...)

- Changing anything in the SQL table or the flow REQUIRES you to disconnect the flow from PowerApps (View->Data sources->select the flow from the list) and reconnect it (Action->Flows->select the flow from the list). Sometimes even that is not enough! On more than one occasion I had to "Save As" the flow under a new name and add it back fresh into PowerApps. If all you get in your collection is "Value" data, something has gone awry. I spent hours trying to find what the problem was with this.

- The JSON schema: "Use sample payload to generate schema" is NOT perfect!!! If you get a red "Register error" when you try to add your flow to PowerApps, check your schema. I have found two possible problems:

  - The "type" for a column is empty. If you see "{}" after your column name, this is a problem, and you need to add the type manually. Copy what is between the curly brackets from another column definition and paste it in, making sure the type is correct. Allowable types include "integer", "string", "object", and "boolean". Date columns are converted to a string, so use DateTimeValue() to convert the text to a date/time value if you need it in that format.
  - Sometimes the schema generator interprets a type wrong. Again, if you get the "Register error" when you add the flow, it's likely that an "integer" was interpreted as a "string", and you will need to correct the schema manually.
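Both fixes amount to editing the `properties` of the generated schema. A minimal Python sketch, assuming the schema has the array-of-objects shape generated from the square-bracketed sample: `repair_schema` is a hypothetical helper that fills untyped `{}` columns with a default type and forces any column listed in `overrides` (for example an integer that was read as a string) to the type you specify. The accepted names are JSON Schema's type keywords.

```python
import copy
from typing import Any

# JSON Schema type keywords accepted by this sketch
ALLOWED_TYPES = {"integer", "number", "string", "boolean", "object", "array"}


def repair_schema(schema: dict[str, Any], overrides: dict[str, str],
                  default: str = "string") -> dict[str, Any]:
    """Return a copy of the schema with untyped columns filled in and overrides applied."""
    fixed = copy.deepcopy(schema)  # leave the original schema untouched
    properties = fixed["items"]["properties"]
    for column, definition in list(properties.items()):
        wanted = overrides.get(column)
        if wanted is None:
            if definition:  # already typed and not overridden: keep as-is
                continue
            wanted = default  # an empty {} definition gets the default type
        if wanted not in ALLOWED_TYPES:
            raise ValueError(f"unsupported type {wanted!r} for column {column!r}")
        properties[column] = {"type": wanted}
    return fixed


generated = {
    "type": "array",
    "items": {
        "type": "object",
        "properties": {
            "ID": {"type": "string"},  # mis-typed by the generator
            "Title": {"type": "string"},
            "LastCost": {},  # empty type, one of the "Register error" causes above
        },
    },
}
fixed = repair_schema(generated, overrides={"ID": "integer"}, default="number")
print(fixed["items"]["properties"])
# {'ID': {'type': 'integer'}, 'Title': {'type': 'string'}, 'LastCost': {'type': 'number'}}
```

Unknown type names raise an error instead of being written into the schema, so a typo fails here rather than later as another "Register error".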

Flow execution time for 22,000 rows (ten columns of data) was 29 seconds in total. I did a test run with a basic table object and a counter, and PowerApps shows the table in just about that amount of time. Not bad if you are in a situation where you can preload all of your data, and it eliminates delegation issues.


@Anonymous,

Where are you getting this error? Are you triggering the flow from PowerApps? I believe your flow has 2-3 minutes to run if you are using a Response action. Let me know how you are doing this.
