- Microsoft Power Automate Community

- Welcome to the Community!

- News & Announcements

- Get Help with Power Automate

- General Power Automate Discussion

- Using Connectors

- Building Flows

- Using Flows

- Power Automate Desktop

- Process Mining

- AI Builder

- Power Automate Mobile App

- Translation Quality Feedback

- Connector Development

- Power Platform Integration - Better Together!

- Power Platform Integrations (Read Only)

- Power Platform and Dynamics 365 Integrations (Read Only)

- Galleries

- Community Connections & How-To Videos

- Webinars and Video Gallery

- Power Automate Cookbook

- Events

- 2021 MSBizAppsSummit Gallery

- 2020 MSBizAppsSummit Gallery

- 2019 MSBizAppsSummit Gallery

- Community Blog

- Power Automate Community Blog

- Community Support

- Community Accounts & Registration

- Using the Community

- Community Feedback

- Microsoft Power Automate Community

- Galleries

- Power Automate Cookbook

- Re: Excel Batch Create, Update, and Upsert

Re: Excel Batch Create, Update, and Upsert

06-13-2022 18:35 PM - last edited 06-20-2022 13:48 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Excel Batch Create, Update, and Upsert

Update & Create Excel Records 50-100x Faster

I was able to develop an Office Script to update rows and an Office Scripts to create rows from Power Automate array data. So instead of a flow creating a new action API call for each individual row update or creation, this flow can just send an array of new data and the Office Scripts will match up primary key values, update each row it finds, then create the rows it doesn't find.

And these Scripts do not require manually entering or changing any column names in the Script code.

• In testing for batches of 1000 updates or creates, it's doing ~2000 row updates or creates per minute, 50x faster than the standard Excel create row or update row actions at max 50 concurrency. And it accomplished all the creates or updates with less than 4 actions or only .4% of the standard 1000 action API calls.

• The Run Script code for processing data has 2 modes, the Mode 2 batch method that saves & updates a new instance of the table before posting batches of table ranges back to Excel & the Mode 1 row by row update calling on the Excel table.

The Mode 2 script batch processing method will activate for updates on tables less than 1 million cells. It does encounter more errors with larger tables because it is loading & working with the entire table in memory.

Shoutout to Sudhi Ramamurthy for this great batch processing addition to the template!

Code Write-Up: https://docs.microsoft.com/en-us/office/dev/scripts/resources/samples/write-large-dataset

Video: https://youtu.be/BP9Kp0Ltj7U

The Mode 1 script row by row method will activate for Excel tables with more than 1 million cells. But it is still limited by batch file size so updates on larger tables will need to run with smaller cloud flow batch sizes of less than 1000 in a Do until loop.

The Mode 1 row by row method is also used when the ForceMode1Processing field is set to Yes.

Be aware that some characters in column names, like \ / - _ . : ; ( ) & $ may cause errors when processing the data. Also backslashes \ in the data, which are usually used to escape characters in strings, may cause errors when processing the JSON.

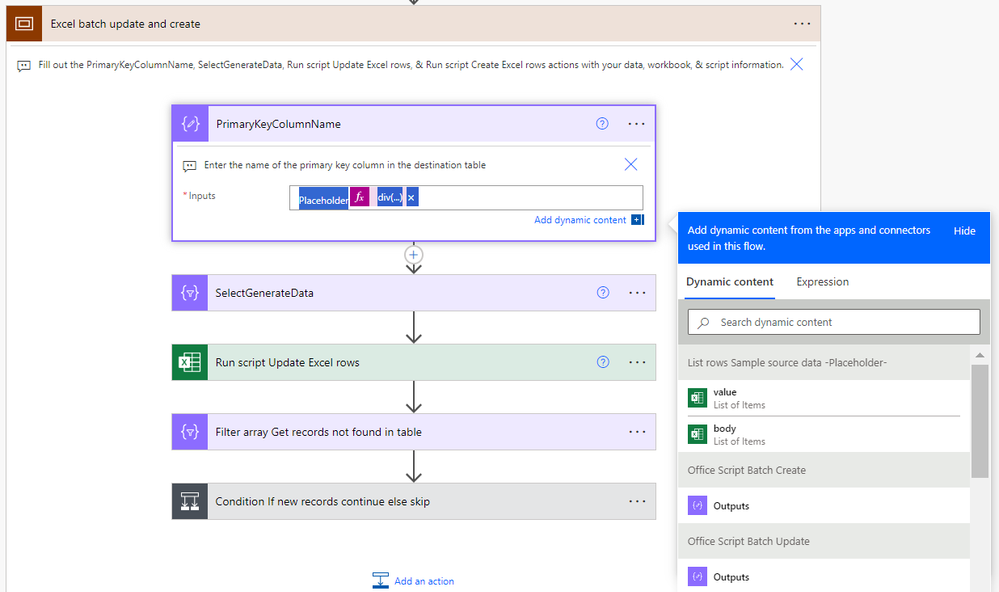

Version 7 Note

Diverting from what is shown in the video, I was able to remove almost all the flow actions in the "Match new and existing data key values then batch update" scope after replicating their functions in the scripts themselves. The flow now goes directly from the "SelectGenerateData" action to the "Run script Update Excel rows" action and the script handles matching up the UpdatedData JSON keys/field names to the destination table headers.

Also version 7 changes the primary key set up in the SelectGenerateData and changes the logic for skipping cell value updates & blanking out cell values.

Now the primary key column name from the destination table must be present in the SelectGenerateData action with the dynamic content for the values you want to match up to update. No more 'PrimaryKey' line, the update script will automatically reference the primary key column in the SelectGenerateData data based on the PrimaryKeyColumnName input on the update script action in the flow.

Now leaving a blank "" in the SelectGenerateData action will make that cell value in the Excel table empty, while leaving a null in the SelectGenerateData action will skip updating / not alter the cell value that currently exists in the Excel table there.

Version 6 which looks closer to the version shown in the video can still be accessed here: https://powerusers.microsoft.com/t5/Power-Automate-Cookbook/Excel-Batch-Create-Update-and-Upsert/m-p...

Version 7 Set-Up Instructions

Go to the bottom of this post & download the BatchExcel_1_0_0_xx.zip file. Go to the Power Apps home page (https://make.powerapps.com/). Select Solutions on the left-side menu, select Import solution, Browse your files & select the BatchExcel_1_0_0_xx.zip file you just downloaded. Then select Next & follow the menu prompts to apply or create the required connections for the solution flows.

Once imported, find the Batch Excel solution in the list of solution & click it to open the solution. Then click on the Excel Batch Upserts V7 item to open the flow. Once inside the flow, delete the PlaceholderValue Delete after import action.

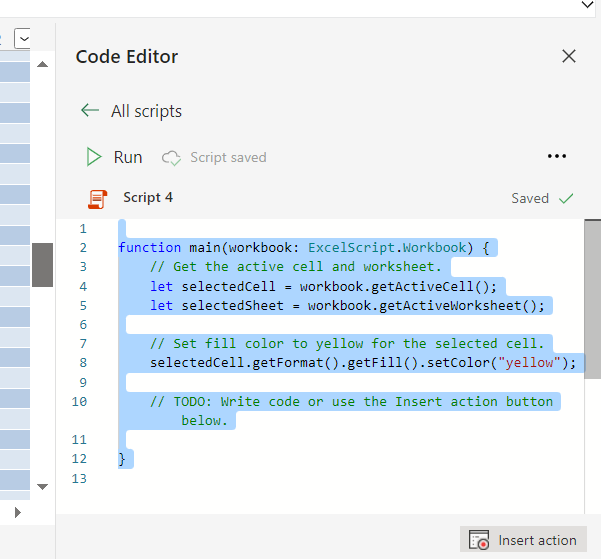

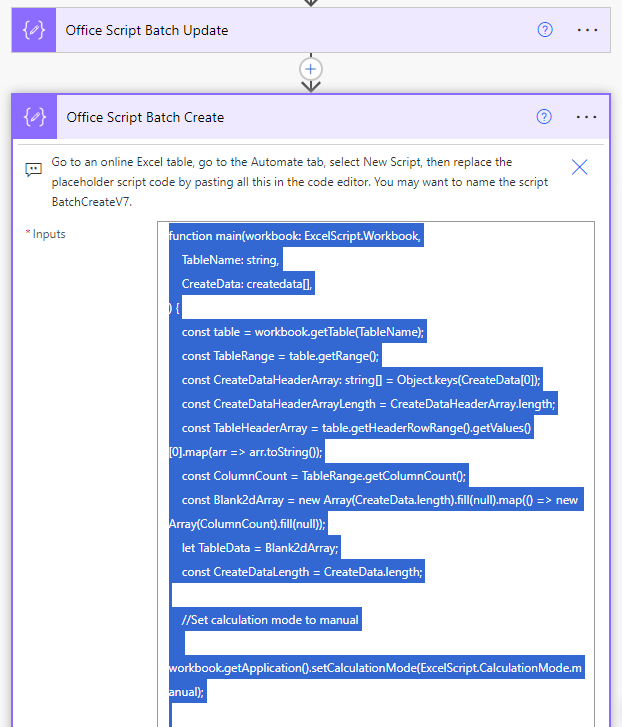

Open the Office Script Batch Update compose action to the BatchUpdateV7 script code. Select everything inside the compose input & control + C copy it to the clipboard.

Then find & open an Excel file in Excel Online. Go to the Automate tab, click on All Scripts & then click on New Script.

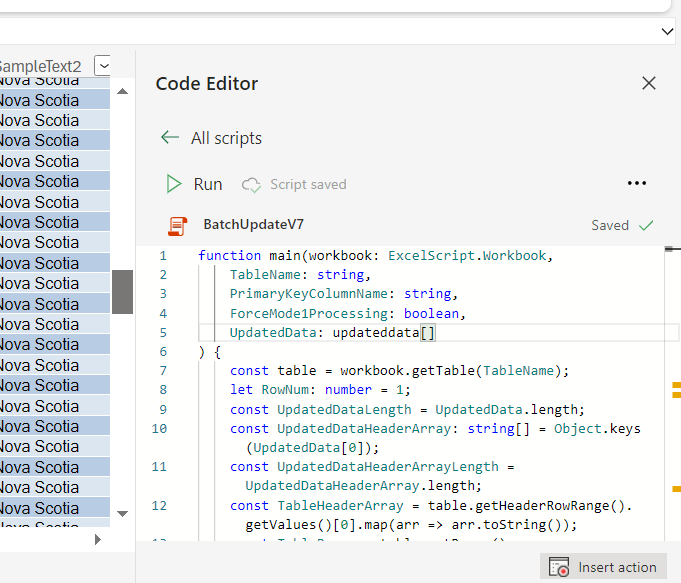

When the new script opens, select everything in the script & control + V paste the BatchUpdateV7 script from the clipboard into the menu. Then rename the script BatchUpdateV7 & save it. That should make the BatchUpdateV7 reference-able in the later Run script flow action.

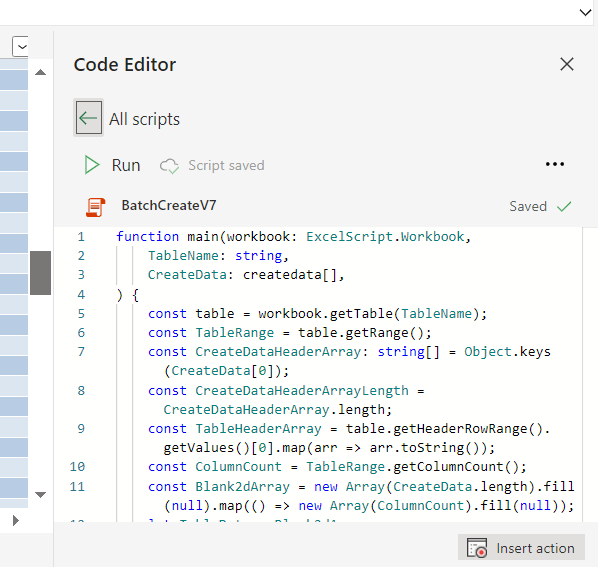

Do the same process to import the BatchCreateV7 script.

Then go to the List rows Sample source data action. If you are going to use an Excel table as the source of updated data, then you can fill in the Location, Document Library, File, & Table information on this action. If you are going to use a different source of updated data like SharePoint, SQL, Dataverse, an API call, etc, then delete the List rows Sample source data placeholder action & insert your new get data action for your other source of updated data.

Following that, go to the Excel batch update & create scope. Open the PrimaryKeyColumnName action, remove the placeholder values in the action input & input the column name of the unique primary key for your destination Excel table. For example, I use ID for the sample data.

Then go to the SelectGenerateData action.

If you replaced the List rows Sample source data action with a new get data action, then you will need to replace the values dynamic content from that sample action with the new values dynamic content of your new get data action (the dynamic content that outputs a JSON array of the updated data).

In either case, you will need to input the table header names from the destination Excel table on the left & the dynamic content for the updated values from the updated source action on the right. You MUST include the column header for the destination primary key column & the the primary key values from the updated source data here for the Update script to match up what rows in the destination table need which updates from the source data. All the other columns are optional / you only need to include them if you want to update their values.

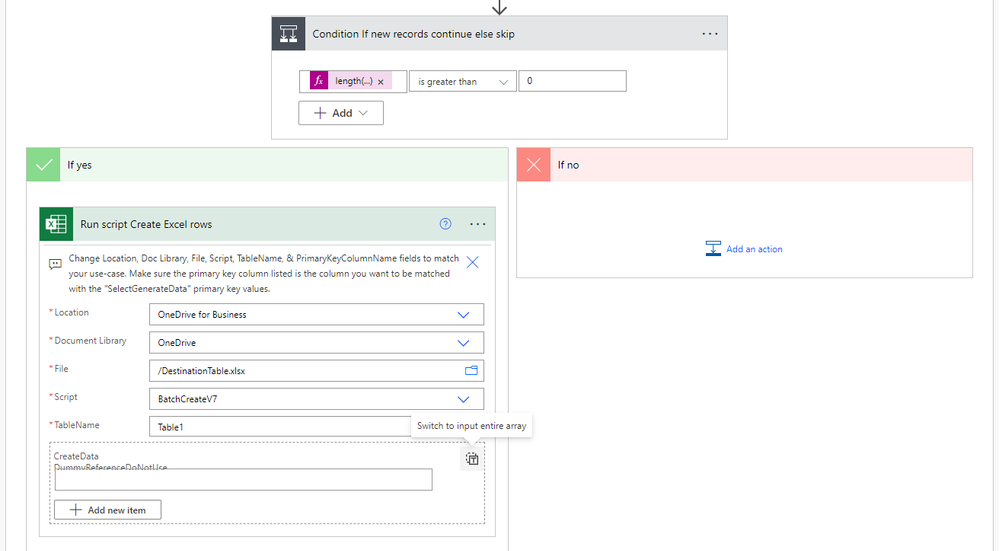

After you have added all the columns & updated data you want to the SelectGenerateData action, then you can move to the Run script Update Excel rows action. Here add the Location, Document Library, File Name, Script, Table Name, Primary Key Column Name, ForceMode1Processing, & UpdatedData. You will likely need to select the right-side button to switch the UpdatedData input to a single array input before inserting the dynamic content for the SelectGenerateData action.

Then open the Condition If new records continue else skip action & open the Run script Create Excel rows action. In this run script action input the Location, Document Library, Script, Table Name, & CreateData. You again will likely need to select the right-side button to change the CreateData input to a single array input before inserting the dynamic content for the Filter array Get records not found in table output.

If you need just a batch update, then you can remove the Filter array Get records not found in table & Run script Create Excel rows actions.

If you need just a batch create, then you can replace the Run script Batch update rows action with the Run script Batch create rows action, delete the update script action, and remove the remaining Filter array Get records not found in table action. Then any updated source data sent to the SelectGenerateData action will just be created, it won't check for rows to update.

Thanks for any feedback,

Please subscribe to my YouTube channel (https://youtube.com/@tylerkolota?si=uEGKko1U8D29CJ86).

And reach out on LinkedIn (https://www.linkedin.com/in/kolota/) if you want to hire me to consult or build more custom Microsoft solutions for you.

Office Script Code

(Also included in a Compose action at the top of the template flow)

Batch Update Script Code: https://drive.google.com/file/d/1kfzd2NX9nr9K8hBcxy60ipryAN4koStw/view?usp=sharing

Batch Create Script Code: https://drive.google.com/file/d/13OeFdl7em8IkXsti45ZK9hqDGE420wE9/view?usp=sharing

(ExcelBatchUpsertV7 is the core piece, ExcelBatchUpsertV7b includes a Do until loop set-up if you plan on updating and/or creating more than 1000 rows on large tables.)

watch?v=HiEU34Ix5gA

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Excel Batch Delete Template is Listed Here:

Excel Batch Delete - Power Platform Community (microsoft.com)

And if you want a work-around trigger for When an Excel row is created, modified, or deleted, check here:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

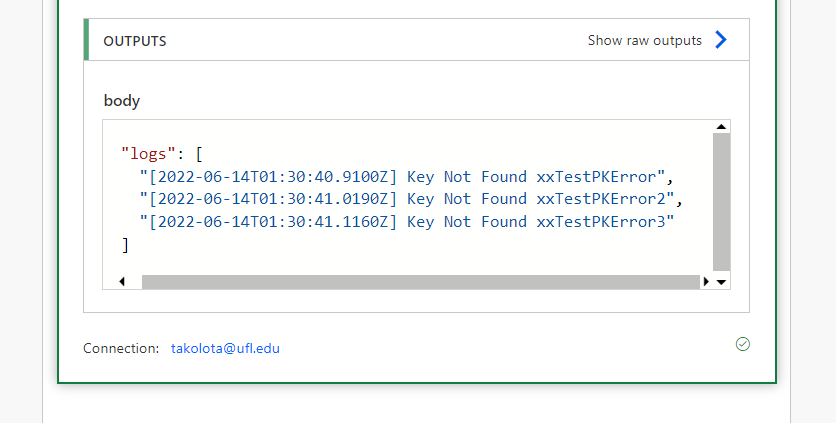

Looks like I forgot to error handle in the Office Script when there is a primary key in the source data that isn't in the destination Excel workbook.

Check & copy over the new Office Script code in the original post that now sends a list of primary keys not found in the Excel table to the Run script output log.

EDIT: Any primary keys not found are now listed in an array in the “results” piece of the Run script outputs. This can then be referenced in any later actions.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for sharing the solution @takolota! Performing 10x faster is very impressive!

I'm wondering if tweaking the Office Scripts a little bit would make the solution run even faster -

So instead of reading, finding, and setting table data all inside the nested loops, maybe you can try reading the complete table data into an array in one shot through:

let tableRange = table.getRange();

let tableData = tableRange.getValues();

Then you can do searching and updating all against this array tableData. Hopefully you won't need to call any workbook APIs and can use vanilla string and array methods of JavaScript/TypeScript to search and update content within an array.

And at the end, you can put the array data back into the table in just one shot:

tableRange.setValues(tableData);

The idea here is to avoid accessing workbook data (getColumn, getRange, getRowIndex, getCell, find, etc.) in a loop, because those methods can trigger network calls behind the scenes, which will slow things down especially when dealing with large volume of data. But once you have read the data into the array, all the operations will happen inside memory, which can be a lot faster.

Please refer to this article for more details about Office Scripts performance optimizations: https://docs.microsoft.com/en-us/office/dev/scripts/develop/web-client-performance

Something for you to consider! 🙂

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Yutao

THANK YOU!

I was reviewing the code and making some minor improvements as you sent that. However I didn't know enough about JavaScript & Office Scripts to know exactly what required a call without looking into it more.

The Run script action just updated 5,000 rows in less than 20 seconds.

Of course, when I tried 50,000 rows the...

So it seems the next part of this may be optimizing the batch size & chunking of updates.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I would like this to still work regardless of how large people's tables are in Excel. So the TableRange.setValues(TableData) method of uploading the entire new table from memory will have to wait until I have a better way of batching & chunking those updates like this person does in their video:

(2) Office Scripts: Update large Excel range in performant way - YouTube

I also like that there is less chance of errors with the faster table posting method if someone happens to be working in the table when it gets updated. So this will all have to make it into some V2 later on.

For now, I will leave this version of the Office Script with the TableRange.setValues(TableData) for future reference, and I will be changing some parts of it back.

function main(workbook: ExcelScript.Workbook,

TableName: string,

PrimaryKeyColumnName: string,

UpdatedData: updateddata[],

)

{

let table = workbook.getTable(TableName);

let RowNum: number;

let TableRange = table.getRange()

let TableData = TableRange.getValues()

let ArrayPK = table.getColumn(PrimaryKeyColumnName).getRange().getValues().join("#|#").split("#|#")

let PKValue: string

let ArrayPKErrors = new Array("")

let InitialTableRowNum = TableRange.getRowIndex()

//Iterate through each object item in the array from the flow

for (let i = 0; i < UpdatedData.length; i++)

{

//If the record's Primary Key value is found continue, else post to error log

if (ArrayPK.indexOf(UpdatedData[i].PK) > 0)

{

//Get the row number for the line to update by matching the foreign key from the other datasource to the primary key in Excel

RowNum = ArrayPK.indexOf(UpdatedData[i].PK)

//Iterate through each item or line of the current object

for (let j = 0; j < Object.keys(UpdatedData[i]).length - 1; j++)

{

//Update each value for each item or column given

TableData[RowNum][Number(Object.keys(UpdatedData[i])[j])] = UpdatedData[i][Object.keys(UpdatedData[i])[j]]

}

}

//Post PK not found value to ArrayPKErrors

else {ArrayPKErrors.push(UpdatedData[i].PK)}

}

//Post table in memory to the Excel table

TableRange.setValues(TableData);

console.log("Primary key values not found are listed in the result array")

//Post ArrayPKErrors to flow results

ArrayPKErrors.shift()

return ArrayPKErrors

}

interface updateddata {

'0': string,

'1': string,

'2': string,

'3': string,

'4': string,

'5': string,

'6': string,

'7': string,

'8': string,

'9': string,

'10': string,

'PK': string

}

Thankfully, it is still pretty fast if I do a mix of the original version and of the last version. I can have several operations done completely in memory, then just call on the actual Excel table to update each row. That way it's not referencing the entire table at any point and won't error if it is a large table. Unfortunately that final call to update each row has a size limitation as well when referencing the array sent from Power automate. So the size of the array the flow passes should be set to about 1000 or less. The flow is still able to run each batch of 1000 in less than 20 seconds and we can just use a Do until loop to keep sending batches of 1000. I have updated the original post with this version of the Office Script as it will work for many more use-cases even if it is a little slower.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Yutao

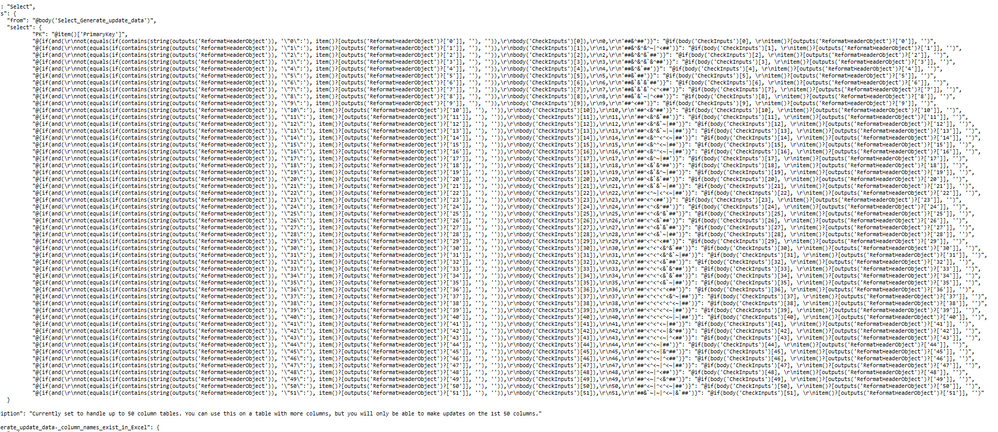

Do you know if many calculations in a Select action are computationally intensive on Microsoft resources? I know it won't affect us customers & our daily action limits or licenses, but I'm just wondering because I could probably rework a bit of this Reformat update for script action to check which columns exist using the 1st Update data JSON object in a compose above the action, then use much simpler expressions in the Select action that will calculate for each update record.

Although, I could probably also adjust this to check for the difference between '' and null values if people want to use a formula in the update data to either explicitly set the updated cell to empty or to not update or change it with the '' value.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry I'm not super familiar with the Select operation. Could you please explain a bit what you are trying to achieve with it? It does feel quite overwhelming seeing this 🙀:

Also wondering if this can be done with a script as well.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Yutao

Yeah, I've cleaned that up a little bit by shifting part of the formulas to another Select action that doesn't have to query a larger array. The special characters are a work-around the JSON requirement for different key label names because I need to be able to not have unused columns in the update data sent to the script. It helps keep the data package small & accomplishes a lot of pre-filtering so the Office Script is more efficient & has more capacity. Even with the work-around it would have been nice to have a Rept() function for strings.

If you have any ideas on something I can set a formula to so it doesn't start a key value pair, that would be great. I could probably combine the entire key & value into a single formula and enter a blank in the alternative mapping pane for the JSON if the column isn't used, then reformat each item into proper JSON. But that really hurts readability. All these ~| &^ &’ ^< special character placeholder labels are replaced & removed in the following action.