- Microsoft Power Automate Community


Re: [Guide] Better Import CSV + Convert to JSON (without Plusmail or any additional apps)

06-12-2020 09:34 AM



Disclaimer: I did not fully write this guide. I found it elsewhere on the internet after intense Google searching, but the guide there was rather poorly written. I was unable to find the original author, but I would like to credit them for such exploration into this black hole.

I played with it for about 5 hours and finally figured it out. It was truly a pain, but this is going to help my team process a report that we run weekly from ServiceNow. I hope this saves some of you a lot of headaches.

Please note that the zip file is empty; follow the guide. I also apologize for the formatting, but the forums did not like how my ordered/unordered lists were set up with code snippets in the HTML.

Order of Actions

- Manually Trigger Flow

- SharePoint Get file content

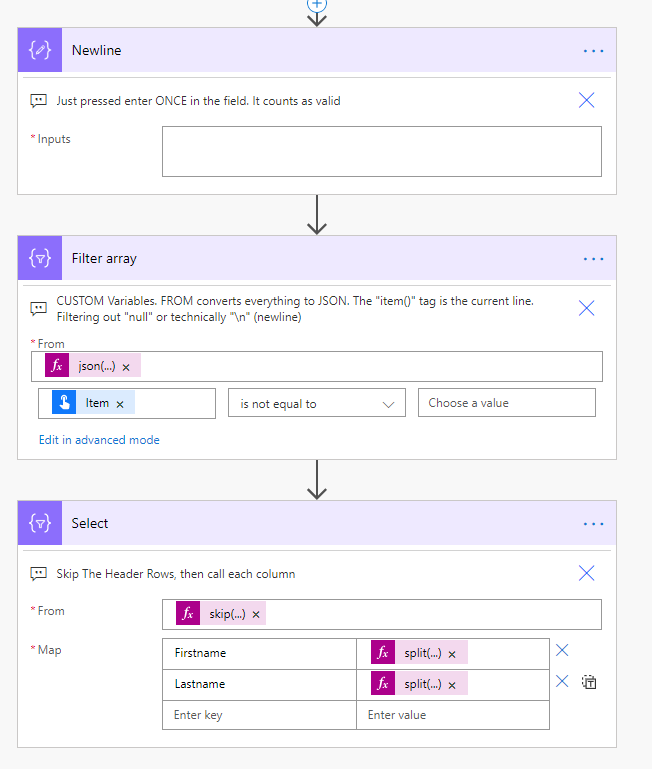

- Compose (just a plain Compose): press Enter/Return on your keyboard ONCE in its input. That single line break counts as a valid input.

- Filter Array

- Select

- SharePoint - Get File Content

- Pick the Site Address

- Pick the File Identifier of your CSV

- Add a New Action - "Compose" (Data Operation)

- In the INPUT, just hit Enter/Return ONCE, it should just make the box bigger. Nothing else should be here. I was unable to get "\n" or "\r" to work here.

- Click the three dots on the right of the header for this action and rename it to Newline (without quotes; the name is CASE SENSITIVE).


- Add a New Action - "Filter array" (Data Operation)

- In the From section, click in the box

- The dynamic content/expression pop-up window should appear.

- Click on Expression within the pop-up window

Copy and Paste this into the Expression input (where it says fx) - it might look like it only pasted the last line. Don't worry, it got all of it.

```
json(
  uriComponentToString(
    replace(
      replace(
        uriComponent(
          split(
            replace(
              base64ToString(
                body('Get_file_content')?['$content']
              ),
              ',',';'),
            outputs('Newline')
          )
        ),
        '%5Cr',
        ''),
      '%EF%BB%BF',
      '')
  )
)
```

- Press OK. If you are unsure whether it all pasted, hover your mouse over the expression inside the "From" field; it should show a little pop-up of the code. Alternatively, click the three dots on the upper right-hand side of the action and choose "Peek code"; it should show it all there.

- Click where it says Choose Value

- The Dynamic Content window should pop up again.

- Click on Expression inside the pop up window.

- Type "item()" in the field where it says fx

item()

- Click OK

- Set the logic to "is not equal to"

- Keep the third value completely blank! This will filter out any lines that are blank. If you have blank lines, it will break the flow in the Select action which is next.

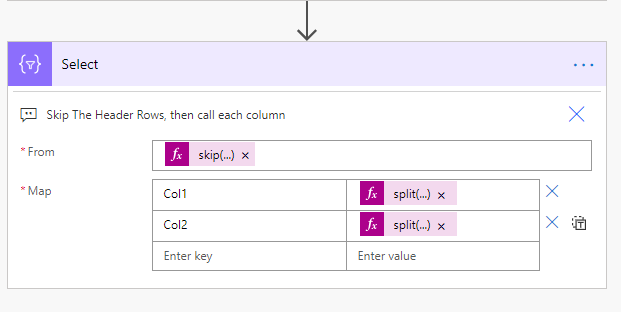
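For anyone who wants to see what the "From" expression and the blank-line filter actually do, here is a rough Python model of both steps. It is only an analogy: `json.dumps` plus `urllib.parse.quote` stand in for Power Automate's `uriComponent()`, on the assumption that `uriComponent()` serializes the array to JSON and then percent-encodes it, which is what the `'%5Cr'` (an escaped `\r`) and `'%EF%BB%BF'` (the UTF-8 byte order mark) replacements suggest. The function names and sample data are made up for illustration.

```python
import base64
import json
from urllib.parse import quote, unquote


def from_expression(content_b64: str) -> list[str]:
    """Rough model of the Filter array "From" expression (a sketch)."""
    # base64ToString(body('Get_file_content')?['$content'])
    text = base64.b64decode(content_b64).decode("utf-8")
    # replace(..., ',', ';')
    text = text.replace(",", ";")
    # split(..., outputs('Newline')); the Newline Compose holds a line feed
    lines = text.split("\n")
    # uriComponent(...), modeled as JSON-serialize then percent-encode.
    # A leftover carriage return becomes the JSON escape \r, i.e. "%5Cr",
    # and a byte order mark (U+FEFF) becomes "%EF%BB%BF".
    encoded = quote(json.dumps(lines, ensure_ascii=False), safe="")
    # the two replace(..., '') calls
    encoded = encoded.replace("%5Cr", "").replace("%EF%BB%BF", "")
    # uriComponentToString(...), then json(...)
    return json.loads(unquote(encoded))


def filter_array(lines: list[str]) -> list[str]:
    """Filter array: keep item() only when it is not equal to ''."""
    return [line for line in lines if line != ""]


def second_column(line: str) -> str:
    """split(item(),';')[1]; a blank line has no second element."""
    return line.split(";")[1]


csv_text = "\ufeffFirstname,Lastname\r\nBob,Smith\r\n"
content = base64.b64encode(csv_text.encode("utf-8")).decode("ascii")
lines = from_expression(content)
print(lines)                # ['Firstname;Lastname', 'Bob;Smith', '']
print(filter_array(lines))  # ['Firstname;Lastname', 'Bob;Smith']
```

The trailing empty string comes from the file's final line break, which is exactly what the "is not equal to" blank filter removes. Calling `second_column("")` raises `IndexError` in this model, which mirrors the guide's warning that blank lines break the Select action.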

- Add A New Action - "Select" (Data Operation)

- Click into the From field

- The dynamic content/expression pop-up window should appear.

- Click Expression

- Copy and paste this into the fx field. It skips your header row.

skip(body('Filter_array'),1)

- Click OK

- The Mapping section is where you set your JSON values. In my case, I uploaded a simple CSV file with two columns, Firstname and Lastname, so for the first column I would use:

split(item(),';')[0]

To use a different column, change the 0 to 1, 2, 3, and so on.

- Each column is referenced by its index:

split(item(),';')[0] for column 1 (remember, indexes start at 0 in programming)

split(item(),';')[1] for column 2

- As for your "Key," you can define that however you like. I like to stick to the column names for clarity.

- The end result should look similar to this when testing:

- The Full Picture:
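The Select step can be modeled the same way. This Python sketch (the function name `select_rows` is hypothetical) skips the header row as `skip(body('Filter_array'),1)` does, then maps each key to a zero-based column index, using the Firstname/Lastname example from the guide.

```python
import json


def select_rows(lines: list[str], keys: list[str]) -> list[dict[str, str]]:
    """Rough model of the Select action (a sketch)."""
    rows = []
    # skip(body('Filter_array'),1): drop the header row
    for item in lines[1:]:
        # split(item(),';') turns one line into its columns
        columns = item.split(";")
        # key number i is mapped to split(item(),';')[i]
        rows.append({key: columns[i] for i, key in enumerate(keys)})
    return rows


result = select_rows(["Firstname;Lastname", "Bob;Smith"], ["Firstname", "Lastname"])
print(json.dumps(result))  # [{"Firstname": "Bob", "Lastname": "Smith"}]
```

The keys are free text, just like the "Key" column in the Select mapping; only the index after `split(...)` has to match the column's position.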


When you changed the ";" back, did you click Update on the expression?

I'd advise directly copying and pasting what I have in the guide. I just ran mine and it worked.

Can you create a new flow with the same steps, and just do it for FirstName,LastName like in the example? Maybe your current CSV data doesn't work with this.

Also, sometimes an expression will not update even if you click the Update button. Hover your mouse over the expression to check that it changed.

@ewchris_alaska


Still no joy. I have no idea what I am doing wrong. Below is the failed run, and then the code.


UPDATE TO ALL INVOLVED WITH THE THREAD!

I have discovered there is a limit to how large your CSV files can be when using this method. The exact number is unknown at the moment, but that is why @ewchris_alaska and I were having a hard time diagnosing his issue today.

Now... I don't know whether this is a "cell" limit or a "character" limit. If your CSV file is 700 lines by 5 columns or larger (3,500 cells), it did not work for us. Anything smaller did work with his cleaned data set (confidential info wiped). It would be interesting if someone could test this with their own large data sets in CSV format. @prodaptiv-c this may have been slightly related to your issue.


Thanks. This was very useful.

I only had to adjust the JSON expression a little, removing the replacement of ',' with ';', because my source file already used ';' as its delimiter and some of its data uses ',' in other contexts.

5*
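To illustrate why that change matters for a file that is already semicolon-delimited, here is a small Python sketch. The row "Smith, Bob;Sales" is a made-up line whose first field contains a comma, and `split_columns` is a hypothetical helper. With the guide's ',' to ';' replacement, the comma inside the name becomes an extra column; without it, the row splits correctly.

```python
def split_columns(line: str, replace_commas: bool) -> list[str]:
    """Split one line on ';', optionally after the guide's ',' to ';' replace."""
    if replace_commas:
        # replace(..., ',', ';') from the original expression
        line = line.replace(",", ";")
    return line.split(";")


row = "Smith, Bob;Sales"
print(split_columns(row, replace_commas=True))   # ['Smith', ' Bob', 'Sales']
print(split_columns(row, replace_commas=False))  # ['Smith, Bob', 'Sales']
```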


Has anyone found a way to do this with large CSVs?

Terry


Hi All,

Any updates on processing the large CSV file?


His idea works well, but I don't use the JSON schema. I grab the data directly with split(item(),<delimiter>) and then format it inside the Select.


I've found this to be the simplest way to parse a CSV, but as others have mentioned, this code is still highly dependent on the crazy things CSVs can do. I imagine that's part of why it's a premium feature right now. Anyway, if you're struggling, here are some tips that finally made this work for me.

- My CSV data came from an email attachment. Use the built-in Get attachment action to grab its content easily.

- My data needed to be pulled from the contentBytes section of the attachment; you can target this with outputs('Get_Attachment_(V2)')?['body/contentBytes']. This replaces body('Get_file_content')?['$content'] in the instructions.

- My data had double quotes around it plus returns/new lines. This kept the data from converting properly to JSON, and I ended up with one big element containing all of my data, with lots of double quotes and backslashes all over. Check your output for lots of backslashes and \r\n; if you see them, you need to remove them.

Example

["\"Name\",\"Email\"\r\n\"Bob Smith\",\"bob.smith@hotmail.com\"\r\n"]

Instead of

[ "Bob Smith, bob.smith@hotmail.com", ]

To deal with that, you need to wrap your content in replace( ),'"',''). This targets the double quote (") and removes it. Look at how the current code replaces commas with semicolons; you're copying that syntax.

You also need to change the split to deal with those pesky \r\n's; the one included here doesn't do this. This gets tricky and weird because of a bug in the way expressions work. To split correctly, you need to do this:

split( ),'
')

< this can be entered in your expression, but it must have this formatting, with the hard return between the quotes.

OR

"@split( ),'\r\n')" < this needs to be entered directly into the text box, not as an expression, double quotes and everything.

Both of these do the same thing. It took me a long time and lots of research to even find out that this is an issue. Basically, if you do a normal split( ), ',') like the original code shows, my CSV included all those nasty \r\n's rather than ignoring them. Using one of the above uses the hard returns as the delimiter instead of the commas. That, in combination with the quote replace, cleaned up my CSV data well enough to then use this.
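Here is a Python sketch of the two fixes described above, using the Name/Email sample from the example: strip every double quote, then split on the full "\r\n" pair instead of a bare line feed. For contrast it also runs Python's standard `csv` module, which is not available in Power Automate and is included only to show what a real CSV parser does with a quoted field that contains a comma. The sample data and the function name `strip_quotes_and_split` are illustrative.

```python
import csv
import io


def strip_quotes_and_split(text: str) -> list[str]:
    """Remove every double quote, then split on CR LF line breaks."""
    # replace(...,'"','')
    text = text.replace('"', "")
    # split on the full "\r\n" pair so no stray "\r" is left behind
    return text.split("\r\n")


sample = '"Name","Email"\r\n"Bob Smith","bob.smith@hotmail.com"\r\n'

print(sample.split("\n"))  # a bare line feed leaves "\r" on every line
print(strip_quotes_and_split(sample))
# ['Name,Email', 'Bob Smith,bob.smith@hotmail.com', '']

# A quoted field with an embedded comma is where quote stripping breaks:
tricky = '"Smith, Bob","bob@example.com"\r\n'
print(strip_quotes_and_split(tricky)[0].split(","))
# ['Smith', ' Bob', 'bob@example.com']
print(list(csv.reader(io.StringIO(tricky, newline=""))))
# [['Smith, Bob', 'bob@example.com']]
```

Quote stripping is fine for the Name/Email sample, but any quoted field that itself contains a comma ends up split in two, which is one of the "crazy things CSVs can do" that this approach cannot handle.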

Reading this over again, it's a bit scattershot, so I hope someone finds it useful, but I really needed to write this down in one place, as it was a nightmare to make work. MS needs to just add a Parse CSV option; it seems so basic.